The rise of Artificial Intelligence presents a pivotal moment in human history. Its transformative power is undeniable, promising to reshape industries, societies, and even our understanding of intelligence itself. However, as this power becomes increasingly concentrated in the hands of a few dominant tech corporations, a troubling specter emerges: "digital feudalism." This is a future where access to essential AI capabilities, and thus to economic and societal participation, is controlled by a new class of technological overlords. This article explores this looming risk and champions the vital role of open-source AI as a bulwark, a critical force for fostering democratic access, distributed innovation, and a more equitable technological future.

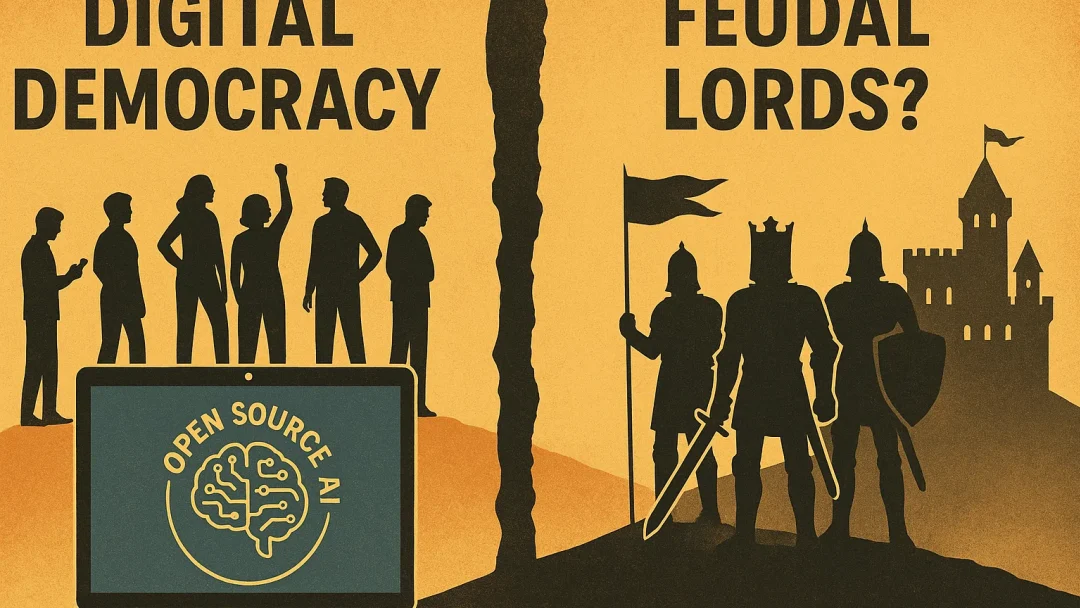

Digital Democracy or Feudal Lords? Open Source AI as a Bulwark

Artificial Intelligence is not merely another technological advancement; it represents a fundamental shift in how information is processed, decisions are made, and value is created. The companies at the forefront of AI development, often referred to as "Big Tech," are investing billions to create increasingly sophisticated models. While these innovations are remarkable, the prevailing business models—characterized by proprietary systems, expensive access tiers, and closed ecosystems—threaten to create a new societal hierarchy.

In this scenario, a few powerful entities control the "means of intelligence production," much like feudal lords controlled land and resources in centuries past. Individuals, smaller businesses, researchers, and even entire nations could become dependent "digital serfs," reliant on these tech giants for access to the AI tools essential for progress and participation in the modern world. This isn't hyperbole; it's a tangible risk if current trends continue unchecked. The question before us is stark: Will AI usher in an era of broader digital democracy, or will it solidify a new form of digital feudalism?

Understanding Digital Feudalism

The term "digital feudalism" describes a socio-economic structure where power is highly concentrated in the hands of a few entities that control essential digital infrastructure and platforms. In the context of AI:

- Control of Foundational Models: A small number of large corporations are developing the most powerful "foundation models" or "frontier models." These models, trained on vast datasets and requiring immense computational resources, become the underlying infrastructure for a wide array of AI applications.

- Access as a Tollgate: Access to the full capabilities of these models is often granted through restrictive licenses, high subscription fees, or API usage costs that can be prohibitive for many. This effectively creates tollgates to the most advanced AI.

- Data Moats: These corporations often have exclusive access to massive datasets, which are crucial for training cutting-edge AI. This creates a "data moat" that makes it difficult for new players to compete, further centralizing power.

- Dependence and Lack of Control: Users become dependent on these proprietary platforms. They may have limited control over the AI's functioning, its embedded biases, how their data is used, or future changes in pricing and access policies. Innovation itself can become permissioned, requiring the approval of the platform owners or alignment with their goals.

- Economic Disparity: Those who control the AI "fiefdoms" reap disproportionate economic benefits, while those who are merely "users" or "renters" of AI services may see their economic agency diminished. This widens the gap between the AI haves and have-nots.

This isn't a deliberate conspiracy, but rather the potential outcome of market dynamics, network effects, and the immense capital investment required for cutting-edge AI development when not balanced by countervailing forces.

The Dangers of Concentrated AI Power

A future dominated by digital feudalism poses significant risks to democratic values, equitable progress, and individual autonomy.

- Stifled Innovation: While Big Tech labs are highly innovative, true innovation thrives in a diverse ecosystem. If smaller companies, independent researchers, and non-profits cannot access or build upon foundational AI models without exorbitant costs or restrictive terms, a vast wellspring of creativity and problem-solving could be lost. Innovation becomes concentrated and permissioned.

- Increased Inequality: As discussed in previous articles (like "The High Cost of Power"), restricted access to powerful AI tools will inevitably exacerbate economic inequalities. The productivity gains and wealth generated by AI will flow primarily to those who own and control the technology.

- Erosion of Democratic Oversight: Decisions made by AI systems can have profound societal impacts (e.g., in loan applications, hiring, criminal justice, news dissemination). If these systems are opaque, proprietary "black boxes" controlled by a few, it becomes incredibly difficult for democratic institutions or the public to scrutinize them, understand their biases, or ensure accountability.

- Reduced Autonomy: Individuals and organizations may find their choices and capabilities increasingly constrained by the platforms and algorithms they depend on. This can lead to a loss of autonomy and agency in both economic and personal spheres.

- Risk of Algorithmic Bias at Scale: AI models learn from data, and if that data reflects existing societal biases (around race, gender, socio-economic status, etc.), the AI can perpetuate and even amplify these biases. When such models are controlled by a few and deployed globally, these biases can become embedded at an unprecedented scale, with limited avenues for redress.

- Geopolitical Implications: Nations lacking indigenous AI capabilities could become technologically dependent on, and potentially subservient to, the countries or corporations that control advanced AI. This has implications for national sovereignty and global power balances.

Open Source AI: A Democratic Counterbalance

In the face of these challenges, the open-source AI movement emerges as a critical bulwark against digital feudalism. Open source, in this context, generally refers to AI models, tools, and datasets that are made publicly available, allowing anyone to use, study, modify, and distribute them (often with certain licensing conditions that promote continued openness).

How Open Source AI Promotes Digital Democracy:

- Democratizing Access:

  - Lowering Cost Barriers: Open-source AI models are typically free to access and use, though users might still incur computational costs to run or fine-tune them. This dramatically lowers the financial barrier to entry compared to expensive proprietary systems.

  - Global Availability: Open-source resources can be accessed by anyone with an internet connection, regardless of their geographic location or institutional affiliation, helping to bridge the AI divide between developed and developing nations.

- Fostering Distributed Innovation:

  - Building on the Shoulders of Giants (Legally): Open source allows researchers, startups, and individual developers to build upon existing advanced models, rather than having to recreate them from scratch. This accelerates innovation.

  - Permissionless Creativity: Innovators can experiment and adapt open-source models for a vast array of applications without needing permission from a central authority or being locked into a specific vendor's ecosystem.

  - Niche Applications: Open source facilitates the development of AI solutions tailored to specific community needs or niche markets that might be overlooked by large corporations focused on mass-market applications.

- Enhancing Transparency and Accountability:

  - Scrutinizing Models: When model architectures, code, and sometimes even training data are open, they can be scrutinized by a global community of researchers and developers. This helps in identifying biases, security vulnerabilities, and limitations.

  - Public Understanding: Openness contributes to a better public understanding of how AI systems work, demystifying the technology and enabling more informed societal conversations about its use.

  - Reproducibility: Open-source models and datasets allow for reproducible research, a cornerstone of scientific progress.

- Reducing Vendor Lock-In and Promoting Competition:

  - Alternatives to Proprietary Systems: A healthy open-source AI ecosystem provides viable alternatives to the offerings of a few dominant tech companies, preventing excessive market concentration.

  - Interoperability: Open standards and models can promote greater interoperability between different AI systems and tools.

- Empowering Education and Research:

  - Hands-on Learning: Students and researchers gain invaluable experience by working directly with open-source models and tools.

  - Academic Collaboration: Open source facilitates collaboration among academic institutions worldwide, pooling talent and resources.

Examples of impactful open-source AI initiatives include models like Meta's Llama series (released under a custom license that carries usage restrictions rather than a standard open-source license, though it spurred much open development), Stability AI's Stable Diffusion, BLOOM by BigScience, and the multitude of models and tools available on platforms like Hugging Face.

A Christian Perspective: Stewardship, Justice, and the Common Good in the Digital Age

The principles underlying the open-source movement resonate deeply with Christian ethics.

- Stewardship for the Common Good: If AI capabilities are, in part, a fruit of collective human knowledge and publicly funded research (as much foundational computer science research has been), then a purely proprietary approach that excessively restricts access can be seen as poor stewardship. Openness can be a way of stewarding these powerful tools for the benefit of all of God's creation.

- Promoting Justice and Equity: Open source, by lowering access barriers, aligns with the biblical call to uplift the marginalized and ensure that the benefits of powerful new technologies don't only accrue to the already powerful and wealthy. It offers a mechanism to counteract the centralizing tendencies that can lead to injustice.

- Valuing Truth and Transparency: The transparency inherent in many open-source projects aligns with the Christian value of truth. The ability to inspect and understand AI models, rather than treating them as inscrutable black boxes, is crucial for ethical assessment and accountability.

- Fostering Community and Collaboration: The collaborative nature of the open-source movement mirrors the biblical emphasis on community (koinonia) and using diverse gifts for the common good (1 Corinthians 12:7).

While open source is not a panacea and comes with its own challenges (e.g., funding, potential for misuse of open models, governance of large projects), it represents a powerful and ethically aligned pathway toward a more democratic and equitable AI future.

Challenges and the Path Forward for Open Source AI

Despite its immense promise, the open-source AI movement faces hurdles:

- Resource Intensiveness: Developing and training state-of-the-art foundation models requires enormous computational resources and highly specialized talent, which are more readily available to large corporations.

- Data Access: Access to diverse, high-quality datasets for training can be a challenge for open-source projects compared to tech giants with vast proprietary data.

- Potential for Misuse: Openly available powerful models could potentially be misused by malicious actors. This necessitates ongoing research into AI safety and ethical safeguards that can be integrated into open models.

- Sustainability and Governance: Finding sustainable funding models and effective governance structures for large-scale open-source AI projects is crucial.

Addressing these challenges requires concerted effort:

- Public and Philanthropic Funding: Increased investment from governments, foundations, and individuals in open-source AI research and infrastructure.

- Collaborative Initiatives: Partnerships between academia, industry (even Big Tech can contribute to open source, as some do), and non-profits.

- Ethical Licensing and Safeguards: Developing licensing frameworks and technical safeguards for open-source models that encourage responsible use and mitigate risks.

- Democratizing Compute: Initiatives to provide more affordable access to the computational power needed for AI development and deployment.

- Global Cooperation: Fostering international collaboration on open and ethical AI development to ensure benefits are shared globally.

Conclusion: Choosing Democracy Over Feudalism in the Age of AI

The path that AI development takes is not predetermined. It will be shaped by the choices we make today—as developers, policymakers, investors, educators, and citizens. The allure of proprietary control and the market forces driving towards centralization are strong. However, the vision of an open, democratic, and equitable AI future, championed by the open-source movement, offers a compelling and ethically sound alternative.

Allowing AI to become a new form of digital feudalism, where a few "lords" control the essential tools of the modern age, would be a profound failure of stewardship and a betrayal of the technology's potential to uplift all of humanity. Open-source AI provides a vital counter-narrative and a practical pathway toward ensuring that AI power is distributed more broadly, that innovation is permissionless and diverse, and that the benefits of this transformative technology contribute to the common good.

From a Christian perspective, embracing and supporting the principles of openness, transparency, and equitable access inherent in the open-source movement is not merely a pragmatic choice; it is an ethical imperative. It is about actively working to shape a future where technology serves humanity justly, reflecting God's desire for shalom and flourishing for all. The choice between digital democracy and digital feudalism is before us. Let us choose wisely.

FAQs

Q1: If AI models are open source, won't that make it easier for bad actors to misuse them for harmful purposes?

A1: This is a valid and significant concern. The open availability of powerful AI models does carry risks of misuse (e.g., for generating misinformation, creating malicious code, or other harmful applications). The open-source AI community is actively grappling with this through:

- Responsible Release Practices: Some organizations release models with built-in safety filters or in stages, studying potential risks.

- AI Safety Research: Developing techniques to make models inherently safer and less prone to misuse.

- Ethical Licensing: Exploring licenses that might restrict certain harmful uses while keeping the model broadly available for beneficial ones.

- Community Monitoring: The transparency of open source also means more eyes can scrutinize models for vulnerabilities and potential misuses.

It's a balance between the benefits of openness and mitigating risks, and an area of ongoing research and debate.

Q2: Can open-source AI truly compete with the massive R&D budgets of Big Tech companies?

A2: It's a David-and-Goliath situation in terms of direct financial resources. However, open source has other strengths:

- Global Talent Pool: It can draw on the creativity and expertise of a worldwide community of developers and researchers.

- Rapid Iteration and Adaptation: Open-source projects can often adapt and evolve quickly.

- Focus on Specific Needs: Open-source efforts can create highly effective models for niche applications that Big Tech might ignore.

- Collaboration: Collaborative funding models, academic consortia, and even contributions from industry players who also benefit from a healthy open-source ecosystem can help level the playing field.

While matching the sheer scale of the largest proprietary models is difficult, open source can provide powerful, accessible, and often more transparent alternatives.

Q3: What can individuals do to support open-source AI and a more democratic AI future?

A3: Individuals can:

- Educate Themselves: Learn about open-source AI initiatives and their importance.

- Advocate: Speak up in their workplaces, communities, and online about the need for open and ethical AI.

- Support Open Source Projects: Those with technical skills can contribute to open-source AI projects. Others can consider donating to organizations that support open-source AI development or AI ethics research.

- Use and Promote Open Tools: Where appropriate, choose open-source AI tools and encourage others to explore them.

- Engage in Policy Discussions: Support policies that promote transparency, accountability, and public investment in open AI research and infrastructure.